New Grads, Meet New Metrics: Why Early Career Librarians Should Care About Altmetrics & Research Impact

Photo by Flickr user Peter Taylor (CC BY-NC 2.0)

In Brief

How do academic librarians measure their impact on the field of LIS, particularly in light of eventual career goals related to reappointment, promotion, or tenure? The ambiguity surrounding how to define and measure impact is arguably one of the biggest frustrations that new librarians face, especially if they are interested in producing scholarship outside of traditional publication models. To help address this problem, we seek to introduce early career librarians and other readers to altmetrics, a relatively new concept within the academic landscape that considers web-based methods of sharing and analyzing scholarly information.

By Robin Chin Roemer and Rachel Borchardt

Introduction

For new LIS graduates with an eye toward higher education, landing that first job in an academic library is often the first and foremost priority. But what happens once you land the job? How do new librarians go about setting smart priorities for their early career decisions and directions, including the not-so-long term goals of reappointment, promotion, or tenure?

While good advice is readily available for most librarians looking to advance “primary” responsibilities like teaching, collection development, and support for access services, advice on the subject of scholarship—a key requirement of many academic librarian positions—remains relatively neglected by LIS programs across the country. Newly hired librarians are therefore often surprised by the realities of their long term performance expectations, and can especially struggle to find evidence of their “impact” on the larger LIS profession or field of research over time. These professional realizations prompt librarians to ask what it means to be impactful in the larger world of libraries. Is a poster at a national conference more or less impactful than a presentation at a regional one? Where can one find guidance on how to focus one’s efforts for greatest impact? Finally, who decides what impact is for librarians, and how does one go about becoming a decision-maker?

The ambiguity surrounding how to both define and measure impact quantitatively is a huge challenge for new librarians, particularly for those looking to contribute to the field beyond the publication of traditional works of scholarship. To help address this problem, this article introduces early career librarians and LIS professionals to a concept within the landscape of academic impact measurement that is more typically directed at seasoned librarian professionals: altmetrics, or the creation and study of metrics based on the Social Web as a means for analyzing and informing scholarship ((There are in fact many extant definitions of altmetrics (formerly alt-metrics). However, this definition is taken from one of the earliest sources on the topic, Altmetrics.org. )). By focusing especially on the value of altmetrics to early career librarians (and vice versa) we argue that altmetrics can and should become a more prominent part of academic librarians’ toolkits at the beginning of their careers. Our approach to this topic is shaped by our own early experiences with the nuances of LIS scholarship, as well as by our fundamental interest in helping researchers who struggle with scholarly directions in their fields.

What is altmetrics & why does it matter?

Altmetrics has become something of a buzzword within academia over the last five years. Offering users a view of impact that looks beyond the world of citations championed by traditional metric makers, altmetrics has grown especially popular with researchers and professionals who ultimately seek to engage with the public, including many librarians and LIS practitioners. Robin, for instance, first learned of its existence in late 2011, when working as a library liaison to a School of Communication that included many public-oriented faculty, including journalists, filmmakers, and PR specialists.

One of the reasons for this growing popularity is the narrow definition of scholarly communication that tends to equate article citations with academic impact. Traditional citation metrics like Impact Factor by nature take for granted the privileged position of academic journal articles, which are common enough in the sciences but less helpful in fields (like LIS) that accept a broader range of outputs and audiences. For instance, when Rachel was an early career librarian, she co-produced a library instruction podcast, which had a sizable audience of regular listeners, but was not something that could be described in the same impact terms as an academic article. By contrast, altmetrics indicators tend to land at the level of individual researcher outputs: the number of times an article, presentation, or (in Rachel’s case) podcast is viewed online, downloaded, etc.

Altmetrics also opens up the door to researchers who, as mentioned earlier, are engaged in online spaces and networks that include members beyond the academy. Twitter is a common example of this, as are certain blogs, like those directly sponsored by scholarly associations or publishers. Interested members of the general public, as well as professionals outside of academia, are thus acknowledged by altmetrics as potentially valuable audiences, audiences whose ability to access, share, and discuss research opens up new questions about societal engagement with certain types of scholarship. Consider: what would it mean if Rachel had discovered evidence that her regular podcast listeners included teachers as well as librarians? What if Robin saw on Twitter that a communication professor’s research was being discussed by federal policy makers?

A good example of this from the broader LIS world is the case of UK computer scientist Steve Pettifer, whose co-authored article “Defrosting the Digital Library: Bibliographic Tools for the Next Generation Web” was profiled in a 2013 Nature article for the fact that it had been downloaded by Public Library of Science (PLOS) users 53,000 times between 2008 and 2012, as “the most-accessed review ever to be published in any of the seven PLOS journals” ((http://www.nature.com/nature/journal/v500/n7463/full/nj7463-491a.html )). By contrast, Pettifer’s article had at that point in time generated about 80 citations, a number that, while far from insignificant, left uncaptured the degree of interest in his research from a larger online community. The fact that Pettifer subsequently included this metric as part of a successful tenure package highlights one of the main attractions of altmetrics for researchers: the ability to supplement citation-based metrics, and to build a stronger case for evaluators seeking proof of a broad spectrum of impact.

The potential of altmetrics to fill gaps for both audiences and outputs beyond traditional limits has also brought it to the crucial attention of funding agencies, the vast majority of which have missions that tie back to the public good. For instance, in a 2014 article in PLOS Biology, the Wellcome Trust, the second-highest spending charitable foundation in the world, openly explained its interest in “exploring the potential value of [article level metrics]/altmetrics” to shape its future funding strategy ((http://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.1002003 )). Among the article’s other arguments, it cites the potential for altmetrics to be “particularly beneficial to junior researchers, especially those who may not have had the opportunity to accrue a sufficient body of work to register competitive scores on traditional indicators, or those researchers whose particular specialisms seldom result in key author publications.” Funders, in other words, acknowledge the challenges that (1) early career academics face in proving the potential impact of their ideas; and (2) researchers experience in disciplines that favor a high degree of specialization or collaboration.

As librarians who work in public services, we have both witnessed these challenges in action many times, even in our own field of LIS. Take, for instance, the example of a librarian hoping to publish the results of an information literacy assessment in a high impact LIS journal based on Impact Factor. According to the latest edition of Journal Citation Reports ((This ranking is according to the 2014 edition of Journal Citation Reports, as filtered for the category “Information Science & Library Science.” There is no way to disambiguate this category into further specialty areas. )), the top journal for the category of “Information Science & Library Science” is Management Information Systems Quarterly, a venue that fits poorly with our librarian’s information literacy research. The next two ranked journals, Journal of Information Technology and Journal of the American Medical Informatics Association, offer versions of the same conundrum; neither is appropriate for the scope of the librarian’s work. Thus, the librarian is essentially locked out of the top three ranked journals in the field, not because their research is suspect, but because the research doesn’t match a popular LIS specialty. Scenarios like this are very common, and lend weight to the argument that LIS is in need of alternative tools for communicating and contextualizing scholarly impact to external evaluators.

Non-librarians are of course also a key demographic within the LIS field, and have their own set of practices for using metrics for evaluation and review. In fact, for many LIS professionals outside of academia, the use of non-citation based metrics hardly merits a discussion, so accepted are they for tracking value and use. For instance, graduates in programming positions will undoubtedly recognize GitHub, an online code repository and hosting service in which users are rewarded for the number of “forks,” watchers, and stars their projects generate over time. Similarly, LIS grads who work with social media may utilize Klout scores, a web-based ranking that assigns influence scores to users based on data from sites like Twitter, Instagram, and Wikipedia. The information industry has made great strides in flexing its definition of impact to include more social modes of communication, collaboration, and influence. However, as we have seen, academia continues to linger on the notion of citation-based metrics.

This brings us back to the lure of altmetrics for librarians: namely, its potential to redefine, or at least broaden, how higher education thinks about impact, and how impact can be distinguished from additional evaluative notions like “quality.” Our own experiences and observations have led us to believe strongly that this must be done, but also done with eyes open to the ongoing strengths and weaknesses of altmetrics. With this in mind, let us take a closer look at the field of altmetrics, including how it has developed as a movement.

The organization of altmetrics

One of the first key points to know about altmetrics is how it can be organized and understood. Due to its online, entrepreneurial nature, altmetrics as a field can be incredibly quick-changing and dynamic, an issue and obstacle that we’ll return to a bit later. For now, however, let’s take a look at the categories of altmetrics as they currently stand in the literature.

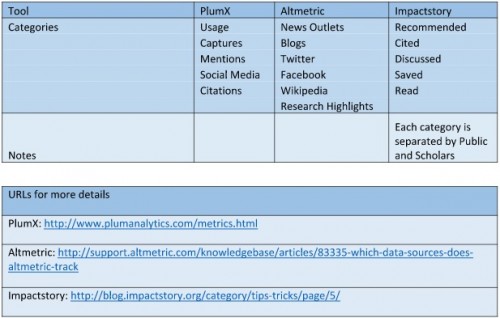

To date, several altmetrics providers have taken the initiative to create categories that are used within their tools. For instance, PlumX, one of the major altmetrics tools, sorts metrics into five categories, including “social media” and “mentions”, both measures of the various likes, favorites, shares and comments that are common to many social media platforms. Impactstory, another major altmetrics tool, divides its basic categories into “Public” and “Scholar” sections based on the audience that is most likely to be represented within a particular tool. To date, there is no best practice when it comes to categorizing these metrics. A full set of categories currently in use by different altmetrics toolmakers can be found in Figure 1.

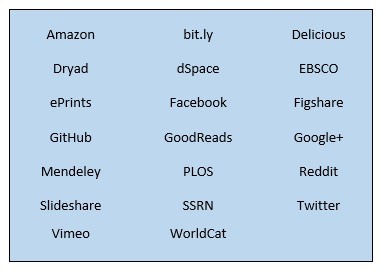

One challenge to applying categories to altmetrics indicators is the ‘grass roots’ way in which the movement was built. Rather than having a representative group of researchers get together and say “let’s create tools to measure the ways in which scholarly research is being used/discussed/etc.”, the movement started with a more or less concurrent explosion of online tools that could be used for a variety of purposes, from social to academic. For example, when a conference presentation is recorded and uploaded to YouTube, the resulting views, likes, shares, and comments are all arguably indications of interest in the presentation’s content, even though YouTube is hardly designed to be an academic impact tool. A sample of similarly flexible tools from which we can collect data relevant to impact is detailed in Figure 2.

Around the mid-2000s ((While the first reference to “altmetrics” arose out of a 2010 tweet by Jason Priem, the idea behind the value of altmetrics was clearly present in the years leading up to the coining of the term. )), people began to realize that online tools could offer valuable insights into the attention and impact of scholarship. Toolmakers thus began to build aggregator resources that purposefully gather data from different social sites and try to present this data in ways that are meaningful to the academic community. However, as it turns out, each tool collects a slightly different set of metrics and has different ideas about how to sort its data, as we saw in Table 1. The result is that there is no inherent rhyme or reason as to why altmetrics toolmakers track certain online tools and not others, nor to what the data they produce looks like. It’s a symptom of the fact that the tools upon which altmetrics are based were not originally created with altmetrics in mind.
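To make the categorization problem concrete, here is a minimal sketch of how two aggregators might sort the same raw counts in different ways. Everything in it is an assumption made for illustration: the counts are invented, the source names are placeholders, and the two schemes (`SCHEME_A`, `SCHEME_B`) only loosely echo the kinds of labels mentioned above. They do not reproduce any real provider's rules.

```python
from collections import defaultdict

# Hypothetical raw counts for one recorded conference presentation.
raw_counts = {
    "youtube_views": 420,
    "slideshare_views": 150,
    "twitter_mentions": 12,
    "mendeley_readers": 8,
}

# Two illustrative category schemes (assumed, not taken from any real tool).
SCHEME_A = {
    "youtube_views": "usage",
    "slideshare_views": "usage",
    "twitter_mentions": "social media",
    "mendeley_readers": "captures",
}
SCHEME_B = {
    "youtube_views": "public",
    "slideshare_views": "public",
    "twitter_mentions": "public",
    "mendeley_readers": "scholar",
}


def summarize(counts, scheme):
    """Total the raw counts under each category of the given scheme."""
    totals = defaultdict(int)
    for source, value in counts.items():
        # Sources a scheme does not know about are kept, not silently dropped.
        totals[scheme.get(source, "uncategorized")] += value
    return dict(totals)


print(summarize(raw_counts, SCHEME_A))
print(summarize(raw_counts, SCHEME_B))
```

The underlying activity is identical in both cases, yet the two summaries tell different stories, which is one reason numbers from different altmetrics tools are hard to compare directly.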

Altmetrics tools

Let’s now take a look at some of the altmetrics tools that have proved to be of most use to librarians in pursuit of information about their impact and scope of influence.

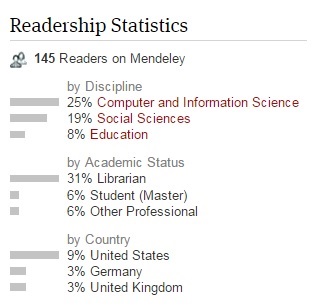

Mendeley

Mendeley is a citation management tool, a category that also includes tools like EndNote, Zotero and RefWorks. These tools’ primary purpose is to help researchers organize citations, as well as to cite research quickly in a chosen style such as APA. Mendeley takes these capabilities one step further by helping researchers discover research through its social networking platform, where users can browse through articles relevant to their interests or create/join a group where they can share research with other users. ((One such group in Mendeley is the Altmetrics group, with close to 1000 members as of July 2015. ))

Unique features: When registering for a Mendeley account, a user must submit basic demographic information, including a primary research discipline. As researchers download papers into their Mendeley library, this demographic information is tracked, so we can see overall interest for research articles in Mendeley, along with a discipline-specific breakdown of readers, as shown in Figure 3. ((Another feature that may be of interest to librarians undertaking a lit review – Mendeley can often automatically extract metadata from an article PDF, and can even ‘watch’ a computer folder and automatically add any new PDFs from that folder into the Mendeley library, making research organization relatively painless. ))

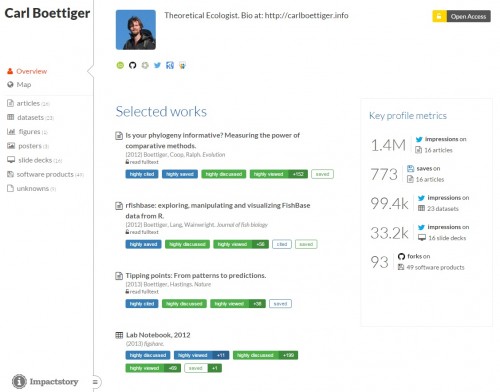

Impactstory

Impactstory is an individual subscription service ($60/year as of writing) that creates a sort of ‘online CV’ supplement for researchers. It works by collecting and displaying altmetrics associated with the scholarly products entered by the researcher into their Impactstory account. As alluded to before, one of the biggest innovations in the altmetrics realm in the past few years has been the creation of aggregator tools that collect altmetrics from a variety of sources and present metrics in a unified way.

Unique features: Impactstory is an example of a product targeted specifically at authors, i.e. displaying altmetrics for items authored by just one person. It is of particular interest to many LIS researchers because it can track products that aren’t necessarily journal articles. For example, it can track altmetrics associated with blogs, SlideShare presentations, and YouTube videos, all examples of ways in which many librarians like to communicate and share information relevant to librarianship.

Figure 4. The left-side navigation shows the different types of research products for one Impactstory profile.

PlumX

PlumX is an altmetrics tool specifically designed for institutions. Like Impactstory, it collects scholarly products produced by an institution and then displays altmetrics for individuals, groups (like a lab or a department), and for the entire institution.

Unique features: Since PlumX’s parent company Plum Analytics is owned by EBSCO, PlumX is the only tool that incorporates article views and downloads from EBSCO databases. PlumX also includes a few sources that other tools don’t incorporate, such as GoodReads ratings and WorldCat library holdings (both metrics sources that work well for books). PlumX products can be made publicly available, such as the one operated by the University of Pittsburgh at http://plu.mx/pitt.

Altmetric

Altmetric is a company that offers a suite of products, all of which are built on the generation of altmetrics geared specifically at journal articles. Their basic product, the Altmetric Bookmarklet, generates altmetrics data for journal articles with a DOI ((The bookmarklet can be downloaded and installed here: http://www.altmetric.com/bookmarklet.php )), with a visual ‘donut’ display that represents the different metrics found for the article (see Figure 5).

Unique features: One product, Altmetric Explorer, is geared toward librarians and summarizes recent altmetrics activity for specific journals. This information can be used to gain more insight into a library’s journal holdings, which can be useful for making decisions about the library’s journal collection.

Figure 5. This Altmetric donut shows altmetrics from several different online tools for one journal article.

Current issues & initiatives

Earlier, we mentioned that one of the primary characteristics of altmetrics is its lack of consistency over time. Indeed, the field has already changed significantly since the word altmetrics first appeared in 2010. Some major changes include: the abandonment of ReaderMeter ((http://readermeter.org/ “It’s been a while… but we’re working to bring back ReaderMeter” has been displayed for several years. )), one of the earliest altmetrics tools; shifting funding models, including the acquisition of PlumX by EBSCO in January 2014 and the implementation of an Impactstory subscription fee in July 2014; and the adoption of altmetrics into well-established scholarly tools and products such as Scopus, Nature journals, and most recently, Thomson Reuters ((Thomson Reuters is currently beta-testing inclusion of “Item Level Usage Counts” into Web of Science, similar to EBSCO’s tracking of article views and downloads. More information on this feature can currently be found in the form of webinars and other events. )).

One exciting initiative poised to bring additional clarity to the field is the NISO (National Information Standards Organization) Altmetrics Initiative. Now in its final stage, the Initiative has three working groups collaborating on a standard definition of altmetrics, use cases for altmetrics, and standards associated with the quality of altmetrics data and the way in which altmetrics are calculated. Advocates of altmetrics (ourselves included) have expressed hope that the NISO Initiative will help bring more stability to this field, and answer confusion associated with the lack of altmetrics standardization.

Criticisms also make up a decent proportion of the conversation about altmetrics. One of the best known is the possibility of ‘gaming’, or of users purposefully inflating altmetrics data. For example, a researcher could ‘spam’ Twitter with links to their article, or could load the article’s URL many times, either of which would increase the metrics associated with their article. We’ve heard about such fears from users before, and they are definitely worth keeping in mind when evaluating altmetrics. However, it’s also fair to say that toolmakers are taking measures to counteract this worry: Altmetric, for example, automatically eliminates tweets that appear auto-generated. Still, more sophisticated methods for detecting and counteracting this kind of activity will eventually help build confidence and trust in altmetrics data.

Another criticism associated with altmetrics is one it shares with traditional citation-based metrics: the ability to accurately and fairly measure the scholarly impact of every discipline. As we’ve seen, many altmetrics tools still focus on journal articles as the primary scholarly output, but for some disciplines, articles are not the only way (or even the main way) in which researchers in that discipline are interacting. Librarianship is a particularly good example of this disciplinary bias. Since librarianship is a ‘discipline of practice’, so to speak, our day-to-day librarian responsibilities are often heavily influenced by online webinars, conference presentations, and even online exchanges via Twitter, blogs, and other social media. Some forms of online engagement can be captured with altmetrics, but many interactions are beyond the scope of what can be measured. For example, when the two of us present at conferences, we try our best to collect a few basic metrics: audience count; audience assessments; and Twitter mentions associated with the presentation. We also upload presentation materials to the Web when possible, to capture post-presentation metrics (e.g. presentation views on SlideShare). In one case, we did a joint talk that was uploaded to YouTube, which meant we could monitor video metrics over time. However, one of the most poignant impact indicators, evidence that a librarian has used the information presented in their own work, is still unlikely to be captured by any of these metrics. Until researchers can say with some certainty that online engagement is an accurate reflection of disciplinary impact, these metrics will always be of limited use when trying to measure true impact.

Finally, there is a concern growing amongst academics regarding the motivations of those pushing the altmetrics movement forward—namely, a concern that the altmetrics toolmakers are the ‘loudest’ voice in the conversation, and are thus representing business concerns rather than the larger concerns of academia. This criticism is actually one that we think strongly speaks to the need for additional librarian involvement in altmetrics on behalf of academic stakeholders, to ensure that their needs are addressed. One good example of librarians representing academia is at the Charleston Conference, where vendors and librarians frequently present together and discuss future trends in the field.

There are thus many uncertainties inherent to the current state of altmetrics. Nevertheless, such concerns do not overshadow the real shift that altmetrics represents in the way that academia measures and evaluates scholarship. Put in this perspective, it is little wonder that many researchers and librarians have found the question of how to improve and develop altmetrics over time to be ultimately worthwhile.

Role of LIS graduates and librarians

As mentioned at the beginning of this article, new academic librarians are in need of altmetrics for the same reasons as all early career faculty: to help track their influence and demonstrate the value of their diverse portfolios. However, the role of LIS graduates relative to altmetrics is also a bit unique, in that many of us also shoulder a second responsibility, which may not be obvious at first to early career librarians. This responsibility goes back to the central role that librarians have played in the creation, development, and dissemination of research metrics since the earliest days of citation-based analysis. Put simply: librarians are also in need of altmetrics in order to provide robust information and support to other researchers—researchers who, more often than not, lack LIS graduates’ degree of training in knowledge organization, information systems, and scholarly communication.

The idea that LIS professionals can be on the front lines of support for impact measurement is nothing new to experienced academic librarians, particularly those in public services roles. According to a 2013 survey of 140 libraries at institutions across the UK, Ireland, Australia and New Zealand, 78.6% of respondents indicated that they either offer or plan to offer “bibliometric training” services to their constituents as part of their support for research ((Sheila Corrall, Mary Anne Kennan, and Waseem Afzal. “Bibliometrics and Research Data Management Services: Emerging Trends in Library Support for Research.” Library Trends 61.3 (2013): 636-674. Project MUSE. Web. 14 Jul. 2015. http://muse.jhu.edu/journals/library_trends/v061/61.3.corrall02.html. )). Dozens of librarian-authored guides on the subject of “research impact,” “bibliometrics,” and “altmetrics” can likewise be found through Google searches. What’s more, academic libraries are increasingly stepping up as providers of alternative metrics. For example, many libraries collect and display usage statistics for objects in their institutional repositories.

Still, for early career librarians, the thought of jumping into the role of “metrics supporter” can be intimidating, especially if undertaken without a foundation of practical experience on the subject. This, again, is a reason that the investigation of altmetrics from a personal perspective is key for new LIS graduates. Not only does it help new librarians consider how different definitions of impact can shape their own careers, but it also prepares them down the line to become advocates for appropriate definitions of impact when applied to other vulnerable populations of researchers, academics, and colleagues.

Obstacles & opportunities

Admittedly, there are several obstacles for new librarians who are considering engaging with research metrics. One of the biggest obstacles is the lack of discourse in many LIS programs concerning methods for measuring research impact. Metrics are only one small piece of a much larger conversation concerning librarian status at academic institutions, and the impact that status has on scholarship expectations, so it’s not a shock that this is a subject that isn’t routinely covered by LIS programs. Regardless, it’s an area for which many librarians may feel underprepared.

Another barrier that can prevent new librarians from engaging with altmetrics is the hesitation to position oneself as an expert in the area when engaging with stakeholders, including researchers, vendors, and other librarians ((The authors can attest that, even after publishing a book on the topic, this is an obstacle that may never truly be overcome! )). These kinds of mental barriers are nearly universal among professionals ((One recent study found that 1 in 8 librarians had higher than average levels of Imposter Syndrome, a rate which increases amongst newer librarians. )) and can be found within nearly every institution ((An overview of one stakeholder group’s perceptions of libraries and librarians, namely that of faculty members, is explored in the Ithaka Faculty Survey. )). At Rachel’s institution, for example, the culture regarding impact metrics has been relatively conservative and dominated by Impact Factor, so she’s been cautious with introducing new impact-related ideas, serving more as a source of information for researchers who seek assistance than as a constant activist for new metrics standards.

Luckily, for every barrier that new LIS grads face in cultivating a professional relationship with altmetrics, there is almost always a balancing opportunity. For example, newly hired academic librarians almost inevitably find themselves in the position of being prized by colleagues for their “fresh perspective” on certain core issues, from technology to higher education culture ((The value of new graduates’ “fresh perspective” is well known anecdotally, but also well-evidenced by the proliferation of Resident Librarian positions at academic libraries across the country. )). During this unique phase of a job, newly hired librarians may find it surprisingly comfortable (even easy!) to bring new ideas about research impact or support services to the attention of other librarians and local administrators.

Another advantage that some early career librarians have in pursuing and promoting altmetrics is position flexibility. Librarians who are new to an institution tend to have the option to help shape their duties and roles over time. Early career librarians are also generally expected by their libraries to devote a regular proportion of their time to the goal of professional development, an area for which the investigation of altmetrics fits nicely, both as a practical skill and a possible topic of institutional expertise.

For those librarians who are relatively fresh out of a graduate program, relationships with former professors and classmates can also offer a powerful opportunity for collecting and sharing knowledge about altmetrics. LIS cohorts have the advantage (outside of some job competition) of entering the field at more or less the same time, a fact that strengthens bonds between classmates, and can translate into a long term community of support and information sharing. LIS teaching faculty may also be particularly interested in hearing from recent graduates about the skills and topics they value as they move through the first couple of years of a job. Communicating back to these populations is a great way to effect change across existing networks, as well as to prepare for the building of new networks around broad LIS issues like impact.

Making plans to move forward

Finding the time to learn more about altmetrics can seem daunting as a new librarian, particularly given how it relates to other “big picture” LIS topics like scholarly communication, Open Access, bibliometrics, and data management. However, this cost acknowledged, altmetrics is a field that quickly rewards those who are willing to get practical with it. Consequently, we recommend setting aside some time in your schedule to concentrate on three core steps for increasing your awareness and understanding of altmetrics.

#1. Pick a few key tools and start using them.

A practical approach to altmetrics means, on a basic level, practicing with the tools. Even librarians who have no desire to become power users of Twitter can learn a lot about the tool by signing up for a free account and browsing different feeds and hashtags. Likewise, librarians curious about what it’s like to accumulate web-based metrics can experiment with uploading one of their PowerPoint presentations to SlideShare, or a paper to an institutional repository. Once you have started to accumulate an online professional identity through these methods, you can begin to track interactions by signing up for a trial of an altmetrics aggregation tool like Impactstory, or a reader-oriented network like Mendeley. Watching how your different contributions do (or do not!) generate altmetrics over time will tell you a lot about the pros and cons of using altmetrics—and possibly about your own investment in specific activities. Other recommended tools to start: see Table One for more ideas.

#2. Look out for altmetrics at conferences and events.

Low-stakes, opportunistic professional development is another excellent strategy for getting comfortable with altmetrics as an early career librarian. For example, whenever you find yourself at a conference sponsored by ALA, ACRL, SLA, or another broad LIS organization, take a few minutes to browse the schedule for any events that mention altmetrics or research impact. More and more, library and higher education conferences are offering attendees sessions related to altmetrics, whether theoretical or practical in nature. Free webinars that touch on altmetrics are also frequently offered by technology-invested library sub-groups like LITA. Use the #altmetrics hashtag on Twitter to help uncover some of these opportunities, or sign up for an email listserv and let them come to you.

#3. Commit to reading a shortlist of altmetrics literature.

Not surprisingly, reading about altmetrics is one of the most effective things librarians can do to become better acquainted with the field. However, whether you choose to dive into altmetrics literature right away, or wait to do so until after you have experienced some fundamental tools or professional development events, the important thing to do is to give yourself time to read not one or two, but several reputable articles, posts, or chapters about altmetrics. The reason for doing this goes back to the set of key issues at stake in the future of altmetrics. Exposure to multiple written works, ideally authored by different types of experts, will give librarians new to altmetrics a clearer, less biased sense of the worries and ambitions of various stakeholders. For example, we’ve found the Society for Scholarly Publishing’s blog Scholarly Kitchen to be a great source of non-librarian higher education perspectives on impact measurement and altmetrics. Posts on tool maker blogs, like those maintained by Altmetric and Impactstory, have likewise proved to be informative, as they tend to respond quickly to major controversies or innovations in the field. Last but not least, scholarly articles on altmetrics are now widely available and can be easily discovered via online bibliographies or good old fashioned search engine sleuthing.

As you can imagine, beginning to move forward with altmetrics can take as little as a few minutes—but becoming well-versed in the subject can take the better part of an academic year. In the end, the decision of how and when to proceed will probably shift with your local circumstances. However, making altmetrics part of your LIS career path is an idea we hope you’ll consider, ideally sooner rather than later.

Conclusion

Over the last five years, altmetrics has emerged in both libraries and higher education as a means of tracing attention and impact, one that reflects the ways that many people, both inside and outside of academia, seek and make decisions about information every day. For this reason, this article has argued that librarians should consider the potential value of altmetrics to their careers as soon as possible (ideally in their early careers), using a variety of web-based indicators and services to help inform them of their growing influence as LIS professionals and scholars.

Indeed, altmetrics as a field is in a state of development not unlike that of an early career librarian. Its future, for instance, is also marked by some questions and uncertainties: definitions that have yet to emerge, disciplines with which it has yet to engage, etc. And yet, despite these hurdles, both altmetrics and new academic librarians share the power over time to change the landscape of higher education in ways that have yet to be fully appreciated. It is this power and value that the reader should remember when it comes to altmetrics and their use.

And so, new graduates, please meet altmetrics. We think the two of you are going to get along just fine.

Thanks to the In the Library with the Lead Pipe team for their guidance and support in producing this article. Specific thanks to our publishing editor, Erin Dorney, our internal peer reviewer, Annie Pho, and to our external reviewer, Jennifer Elder. You each provided thoughtful feedback and we couldn’t have done it without you.

Recommended Resources

Altmetrics.org. http://altmetrics.org/

Altmetrics Conference. http://www.altmetricsconference.com/

Altmetrics Workshop. http://altmetrics.org/altmetrics15/

Chin Roemer, Robin & Borchardt, Rachel. Meaningful Metrics: A 21st Century Librarian’s Guide to Bibliometrics, Altmetrics, & Research Impact. ACRL Press, 2015.

Mendeley Altmetrics Group. https://www.mendeley.com/groups/586171/altmetrics/

NISO Altmetrics Initiative. http://www.niso.org/topics/tl/altmetrics_initiative/

The Scholarly Kitchen. http://scholarlykitchen.sspnet.org/tag/altmetrics/

WUSTL Becker Medical Library, “Assessing the Impact of Research.” https://becker.wustl.edu/impact-assessment
